How Wispbit MCP Solved My AI Code Quality Problem


I use AI coding agents a lot these days: Claude Code, Zencoder, whatever works. They write code fast, but like any code, it still needs someone to check it.

When I review human-written code, I’m looking for bugs or bad architecture. With AI code, the logic is usually fine. The problem is that the AI doesn’t know how my team does things. Variable names that don’t match our conventions. Missing error handling. Security checks we always add that the AI skipped.

I kept giving the same feedback on every review. That got old fast. Wispbit fixes this. It handles those repetitive checks for me.

The Problem

AI agents can be good when you set them up right. We use Zencoder and it works well with the right tools and instructions. But it still needs guidance.

The issue isn’t that AI writes broken code. It’s that AI doesn’t know your team’s way of doing things. It writes generic code that works but doesn’t fit your patterns. Maybe it writes auth logic that works fine, but your team always puts that in middleware. Or it doesn’t follow your service patterns.

So I kept saying the same things in reviews. “Use a FormRequest for validation.” “Put business logic in a Service class.” “Don’t query models in the controller.”

I wanted to stop repeating myself.

How Wispbit Works

Wispbit takes a different approach. Instead of only catching bugs after you write code, it learns the patterns in your codebase and enforces them as you go. It analyzes your existing code and generates rules specific to your project.

The MCP (Model Context Protocol) integration is what makes it useful for AI-written code. When Claude Code or Zencoder writes code, Wispbit checks it against your patterns before it lands in your repo.

So I spend less time explaining the same stuff in reviews.

What the MCP Integration Does

The MCP integration is where this gets good. Your AI agents can:

- Check your codebase rules before writing code

- Get feedback on code as they write it

- Get specific help on how to fix problems

- Learn your team’s patterns

It’s like having someone experienced check the AI’s work as it goes.

Instead of writing code that breaks your patterns and getting corrected in review, AI agents can check what your team expects up front.
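To make "check your codebase rules before writing code" a bit more concrete: MCP messages are JSON-RPC 2.0, and a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The sketch below only builds those two messages in Python. The tool name `get_rules` and its `language` argument are hypothetical placeholders, not Wispbit's actual tool schema; the real names come back from the server's `tools/list` response.

```python
import json
from typing import Any, Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    # json.dumps emits a single line, which is what MCP's stdio transport expects.
    return json.dumps(message)


# Step 1: ask the MCP server which tools it exposes.
list_tools = jsonrpc_request(1, "tools/list")

# Step 2: call a rules tool before writing code.
# "get_rules" and {"language": "php"} are HYPOTHETICAL, not Wispbit's real tool schema.
get_rules = jsonrpc_request(
    2,
    "tools/call",
    {"name": "get_rules", "arguments": {"language": "php"}},
)

print(list_tools)
print(get_rules)
```

A real session also starts with an `initialize` handshake, which this sketch leaves out. In practice you never write these messages yourself: once the server is configured, Claude Code or Zencoder sends them for you.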

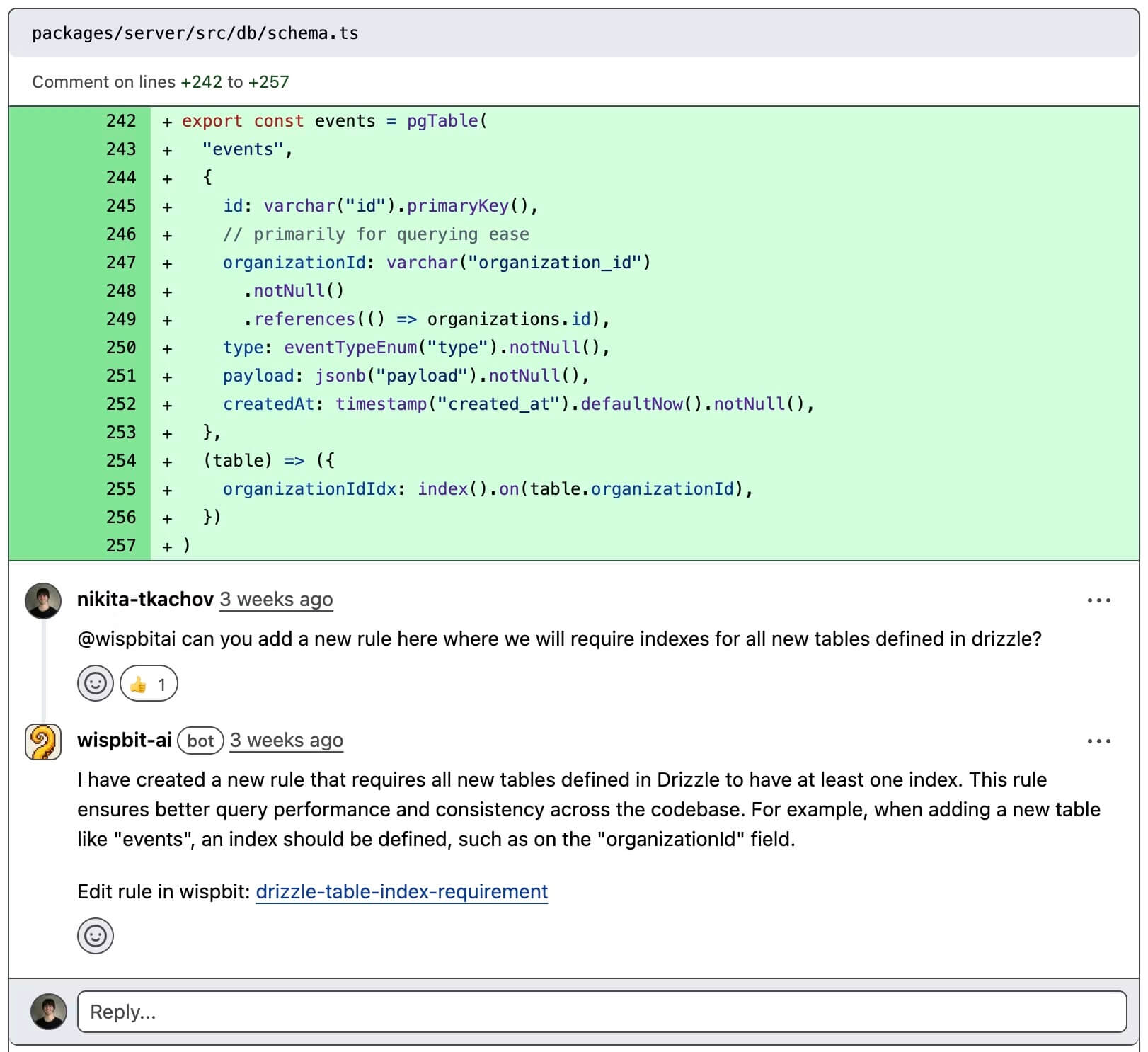

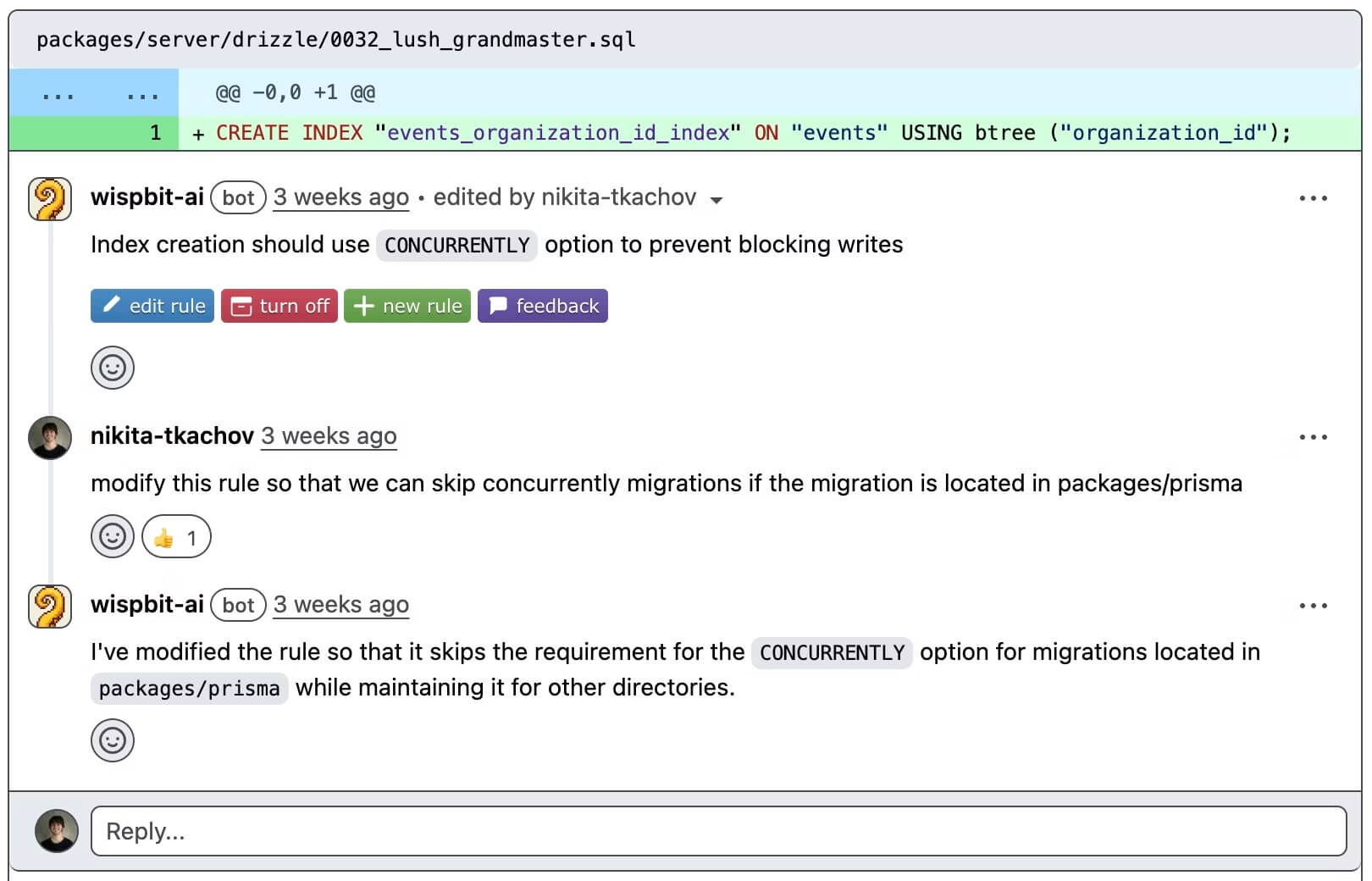

GitHub Integration

The GitHub integration works well. When you open a pull request, Wispbit reviews your code against your rules. It’s not generic analysis; it knows your actual codebase.

You can reply to Wispbit comments in GitHub to change rules. See a false positive? Comment why it’s wrong and it’ll fix the rule. You can ask questions about why it flagged something. Find a new pattern you want to enforce? Highlight the code and ask Wispbit to make it a rule.

It’s more interactive than regular linting tools.

Rules That Make Sense

I’ve tried lots of code quality tools. Most come with hundreds of generic rules that don’t fit your project. You spend more time making exceptions than fixing code.

Wispbit learns from your codebase and makes rules for your patterns. Here’s one it made for my Laravel project:

“Complex business logic should be implemented using Laravel Actions in the app/Actions/ directory rather than in controllers or models”

You won’t find that rule in ESLint or PHPStan. It’s specific to how we structure Laravel apps. And it shows examples from our actual code.

The rules cover:

- Code quality and readability

- Performance patterns

- Security requirements

- Design patterns and architecture

Each rule shows what to do and what not to do, using your actual code.
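Wispbit's internal rule format isn't described here, so the following is only a toy model of the idea: a rule that carries a description, a "do" and a "don't" example, and a check. It encodes my recurring review comment "Don't query models in the controller" as a regex over PHP controller files. The `Rule` class, the rule name, the path glob, and the regex are all hypothetical; a real tool analyzes code far more carefully than a line-by-line pattern match.

```python
import fnmatch
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """A HYPOTHETICAL, simplified codebase rule (not Wispbit's actual format)."""
    name: str
    description: str
    path_glob: str        # files the rule applies to
    forbidden: re.Pattern  # code pattern that violates the rule
    good_example: str     # what to do
    bad_example: str      # what not to do


def check(rule: Rule, path: str, source: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs in `source` that break `rule`."""
    # fnmatch's "*" also matches "/", so nested controller folders are covered too.
    if not fnmatch.fnmatch(path, rule.path_glob):
        return []
    return [
        (number, line.strip())
        for number, line in enumerate(source.splitlines(), start=1)
        if rule.forbidden.search(line)
    ]


no_queries_in_controllers = Rule(
    name="no-model-queries-in-controllers",
    description="Don't query models in the controller; move that logic to a Service or Action class.",
    path_glob="app/Http/Controllers/*.php",
    # Naive heuristic: a static Eloquent-style call such as Order::where( or User::find(
    forbidden=re.compile(r"\b[A-Z]\w*::(where|query|find|all)\("),
    good_example="$orders = $this->orderService->recentFor($user);",
    bad_example="$orders = Order::where('user_id', $user->id)->get();",
)

controller = """<?php
class OrderController extends Controller
{
    public function index(Request $request)
    {
        $orders = Order::where('user_id', $request->user()->id)->get();
        return view('orders.index', compact('orders'));
    }
}
"""

print(check(no_queries_in_controllers, "app/Http/Controllers/OrderController.php", controller))
```

Running the check on the sample controller flags the single `Order::where(...)` line, while the same code under a path like `app/Services/` is left alone. Keeping the good and bad examples on the rule itself mirrors how each Wispbit rule pairs its guidance with real code.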

How I Use It Now

Here’s my workflow with Wispbit and AI agents:

Before writing code, AI agents check existing rules through MCP to understand what patterns we use.

During development, AI agents check code as they write it. No more writing a bunch of code just to find out it doesn’t follow our patterns.

In pull requests, Wispbit reviews everything and gives specific feedback. Not “this might be wrong” but “here’s exactly how to fix this to match your pattern.”

After reviews, I can adjust rules based on what we learn. The system gets better with each PR.

Open Source

I like that Wispbit is committed to open source. They have an open source repository and are transparent about how it works.

They also have a Discord community where you can get help and share patterns with other developers.

What Changed

Since using Wispbit, code reviews are faster and more focused. Instead of explaining the same patterns over and over, I’m talking about architecture and business logic - the stuff that matters.

The asynchronous feedback is huge for distributed teams. When someone opens a PR at 2 AM or while I’m in meetings, they get feedback right away. By the time I review, they’ve already fixed the pattern violations and formatting issues, so I can focus on the logic instead of explaining why we use FormRequests.

New team members get up to speed faster because the rules are documented and enforced automatically. And AI-generated code follows our standards from the start.

The time savings are real. Wispbit estimates about 100 hours saved per engineer per year, and that sounds about right to me.

Getting Started

If you have the same AI code quality issues I had, here’s how to start:

- Sign up at wispbit.com

- Connect your GitHub repos

- Set up the MCP server (instructions in the web app)

- Configure your AI agents to use the Wispbit MCP server

- Start with basic rules and let them grow

The setup is simple, and you’ll see results right away.

Final Thoughts

AI coding agents are powerful, but they need guardrails. Wispbit provides those guardrails in a way that’s collaborative and actually useful.

If you’re using AI agents for development and struggling with code quality consistency, try Wispbit. It solves a problem you might not have realized you could solve.

AI will power the future of coding, but its output still needs quality assurance. Wispbit handles that part.

Check out Wispbit and their documentation to learn more. Their Discord community is worth joining if you’re serious about AI development workflows.